14.10.2023. Zurich

1001 IN 1 NIGHT;

RECYCLING STORIES AT LIGHT SPEED ♻️📚🚀

BY ASS. PROF. DR. MIRO ROMAN, AGOSTINO NICKL, AND ADIL BOKHARI. CURATED BY LATHOUSE. EXHIBITED AT ZENTRALWÄSCHEREI IN ZÜRICH, SWITZERLAND. THIS PROJECT IS A COLLABORATION BETWEEN METEORA (ETH ZURICH) AND STUDIO0MORE (UIBK INNSBRUCK).

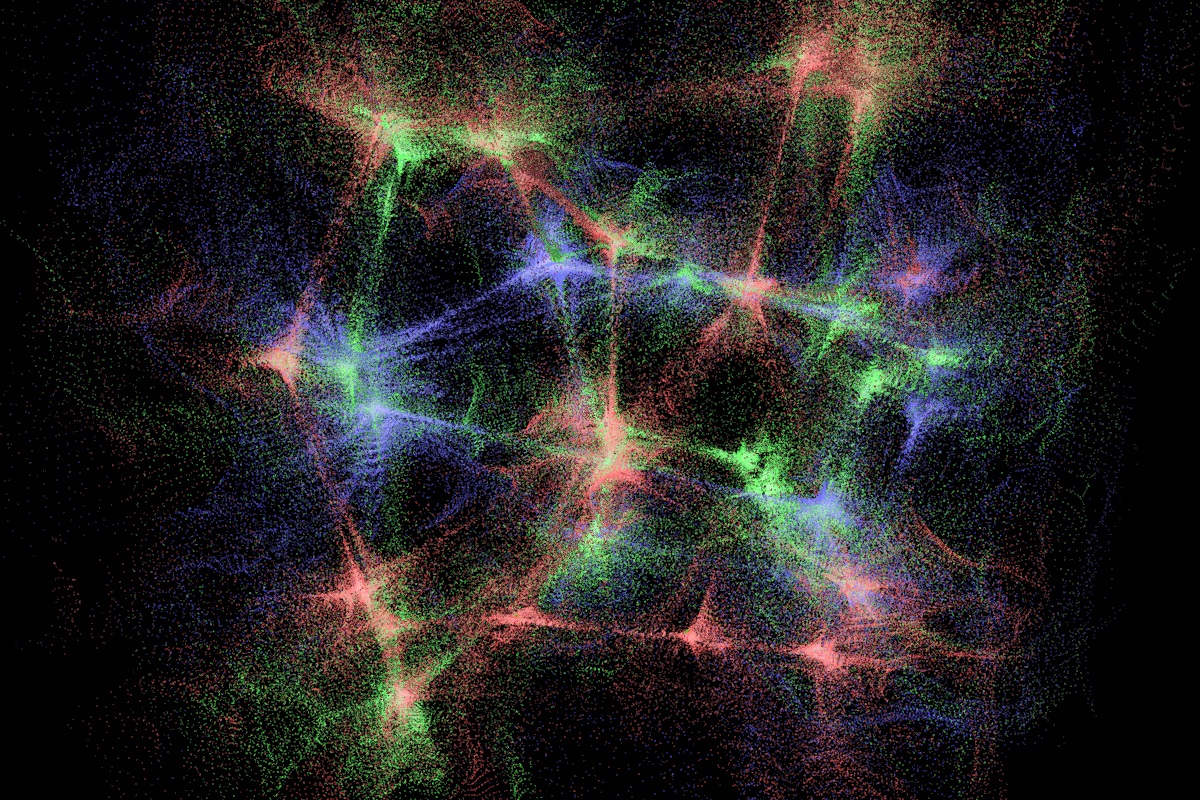

THIS PROJECT PUTS INTO RELATION ARTIFICIAL GENERAL INTELLIGENCE (AGI) WITH ARTIFICIAL PERSONAL INTELLIGENCE (API). IT ENHANCES THE LATENT LOGISTICS OF COMMON SENSE IN AGI USING UNIQUE DATA AND API. OUR APIS ARE LIKE PRISMS, DISCRETIZING THE GENERIC KNOWLEDGE CONTAINED IN LLMS. IN OTHER WORDS, THE CAREFULLY CRAFTED OPTICS OF CUSTOM DATABASES ALTER THE PROBABILISTIC PREDICTIONS OF GENERIC LLMS.

1001 in 1 is an exhibition that plays with big data as if it were Big Macs. It writes using ChatGPT and renders with Midjourney. It brings artificial general intelligence into relation with custom-made search engines. It moves as fast as fast food and feels as light as Coca-Cola Light. No sugar added, just artificial sweeteners. It aims to explore and establish an attitude toward Large Language Models and Text-to-Image Generative Models while reading books and eating popcorn.

1001 in 1 was exclusively rendered into an exhibition for just one night on October 14, 2023, at Zentralwäscherei in Zürich. It was produced and conceptualized by Meteora++ (Adil Bokhari, Agostino Nickl, and Miro Roman) and would not have been possible without the help and support of Guo Zifeng and Jorge Orozco. It was curated by Luck from Lathouse: Michel Kessler and Alessia Bertini. The closing act of the night was a magnificent play performed by Xlat.xo, with Lewis Beauchamp and Maurus Wirth. It was supported by ETH and UIBK.

1001 in 1 works fast, with the slow rituals of folklore. It plays lightly, with the gravitas of history. It sculpts characters, with the all-knowing genericness of big data.

🔗 READ THE FULL ARTICLE FROM TRANS MAGAZINE

🔗 1001 nights rewritten by METEORA++

ARCHITECTONICS OF LANGUAGE

The context of this research project was an effort to establish a general, model-oriented perspective towards computation. Its aim in this respect was to extend and generalize the knowledge about modelling by learning from other fields in order to compare and contrast this knowledge with the established paradigms in architecture. This particular project examined the modelling approaches applied in the theories of natural language. Major references in this context that are concerned with the architectonics of language point to the early work of Noam Chomsky, and the collection of lectures from Ferdinand de Saussure published by his students. Chomsky's early work, explicated in Syntactic Structures (1957), albeit very influential in computer science, has limited value to architectural modelling in terms of the applied architectonics. It is in fact a direct application of principles derived from Hilbert's work on formal systems in the 1920s. Such a syntax-driven perspective is already well established in the modelling of architecture, and includes shape grammars, L-systems, subdivision surfaces, etc. Saussure's work provided more valuable insights into the structure of language, but the notion of architectonics was not explicit enough to make it sufficient for an extended study.

The work of the Danish linguist Louis Hjelmslev (1899–1965) proved to be the most valuable resource for studying language on a formal, scientific basis. His major ambition was to provide a self-sufficient, scientific basis for the theory of language. In order to do so, he found it necessary to rethink the very notions of theory and science. In his most important work, Prolegomena to a Theory of Language (1943), he set a path for such a theory. The results of this research not only provide a fascinating insight into the structure of language but also demonstrate that Hjelmslev actually anticipated a novel way of thinking about models and computation, which was far ahead of his time.

Hjelmslev's theory, from its very axioms, always involves branching. There is never a single thing existing univocally; it is always represented as at least two faces of the same coin. This is conceptually very similar to what we can observe with communication models that stratify their problem on at least two levels of the hypostasis (this can even be traced to Riemann, and his distinctions between local and global, metric and topological, etc.). The idea is that a problem essentially exists on multiple levels at the same time, and that none of them represents an "original domain" of a problem. What is important is the possibility of translating from one level to another, and this can be achieved only in the case of a structure-preserving transformation, known in category theory as a natural transformation.

An important ambition of the Prolegomena was to demonstrate that the nature of language is algebraic. In the last part of his book, Hjelmslev algebraically manipulates elements of the sign function, introducing different architectures of semiotic structures.

2014-2015

Nikola Marincic

marincic@arch.ethz.ch

FROM SIMULATION TO SYNTHESIS IN ARCHITECTURE - MODELING AND SYNTHESIZING ARCHITECTURAL SIMULATIONS WITH DATA-DRIVEN COMPUTATIONAL MACHINES

Computer simulation is an applied methodology that describes and studies the behavior of systems using mathematical or symbolic models on computers. In architecture, simulation techniques have been widely adopted in design practices, allowing design proposals to be evaluated and investigated from multiple perspectives. Although the applications of simulation techniques are varied and have achieved promising results, computer simulation is still considered an “expensive” method, as the computational time it demands is often too long for use in design practice. Also, researchers have realized that complex phenomena such as the dynamic process of urban systems are difficult to formulate as simulation models. Adequate models are essential for simulations. Further, the modeling relies on a hierarchy of specifically selected properties, which faces fundamental limits such as combinatorial explosion.

Early research on artificial intelligence (AI) faced similar challenges: researchers attempted to encapsulate and replicate the human decision process via logic-based rules, which eventually failed because the numerous exceptions to causal relations exceed human capacity. Recent AI applications, on the other hand, use the same machine / structure (e.g. artificial neural networks) to solve different sets of problems by doing numerical approximations (training) on the corresponding data. Preconceived structures of knowledge are no longer necessary for designing models, and therefore recent AI overcomes these limitations, outperforming early AI.

The recent successes in AI motivate this research to address the simulation tasks of architecture in a similar way: instead of following mathematical equations and doing step-by-step simulation, we can make numerical approximations from data and generate the results directly (synthesis). Following this idea, the research intends to apply the same data-driven methodology in different applications, including urban flood prediction, structural simulation and optimization, and urban traffic prediction. As the data-driven machines produce results in constant time, these applications can be run at a much higher resolution or over much larger sample areas than can be achieved with conventional simulation techniques.
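The contrast between step-by-step simulation and direct synthesis from data can be sketched in a few lines. The example below is only an illustration under stated assumptions: the toy basin model, its drainage coefficient, and the names `simulate_final_depth` and `LookupSurrogate` are hypothetical, and a piecewise-linear lookup table stands in for the neural networks mentioned above.

```python
import bisect


def simulate_final_depth(rain_rate, steps=10_000, dt=0.001):
    """Toy step-by-step simulation (hypothetical model): a basin fills with
    rain and drains in proportion to the square root of its water depth."""
    depth = 0.0
    drain_coeff = 2.0  # assumed drainage coefficient, for illustration only
    for _ in range(steps):
        depth += dt * (rain_rate - drain_coeff * max(depth, 0.0) ** 0.5)
    return depth


class LookupSurrogate:
    """Data-driven stand-in for the simulation: linear interpolation between
    precomputed (input, output) samples. Inputs must be sorted ascending."""

    def __init__(self, inputs, outputs):
        self.inputs = list(inputs)
        self.outputs = list(outputs)

    def predict(self, x):
        # Find the pair of training samples that bracket x.
        i = bisect.bisect_right(self.inputs, x)
        i = min(max(i, 1), len(self.inputs) - 1)
        x0, x1 = self.inputs[i - 1], self.inputs[i]
        y0, y1 = self.outputs[i - 1], self.outputs[i]
        return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


# "Training": run the expensive simulation once per sample input.
train_inputs = [i * 0.5 for i in range(21)]  # rain rates 0.0 .. 10.0
train_outputs = [simulate_final_depth(r) for r in train_inputs]
surrogate = LookupSurrogate(train_inputs, train_outputs)

# "Synthesis": answer a new query without stepping through the simulation.
query = 3.3
print("simulated:", round(simulate_final_depth(query), 3))
print("surrogate:", round(surrogate.predict(query), 3))
```

The cost of a surrogate query does not depend on the number of simulation steps, which is the property that makes higher resolutions and larger sample areas affordable; the price is an approximation error that depends on how densely the training inputs cover the query range.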

2018-2019

Vahid Moosavi, Guo Zifeng, João P. Leitão (EAWAG), Nuno E. Simões (University of Coimbra)

guo@arch.ethz.ch

EVENT PROTOCOLS: APPLICATION OF MACHINE INTELLIGENCE IN DISASTER RESPONSE

Due to natural disasters, thousands of human and animal lives are lost every year around the world – these disasters also significantly impact the economy, infrastructure, and availability of resources. After natural disasters, two processes occur as a human reaction: on one hand we produce vast amounts of data in social media, news, satellite and drone imagery, and through the ubiquitous work of NGOs; on the other hand, surveys are conducted using state-of-the-art technologies for disaster response. However, data and knowledge are not commonly used in the scenario of an ongoing event as they arrive too late to influence decision making. So, if we have plenty of data and tools to learn from that data, how can we have a clearer, more agile, and more versatile response to natural disasters? Moreover, if we already have good practices in disaster response, how can learning from previous experiences help us to tackle specific disaster scenarios?

To address the above questions, the research aims to produce a mediality (a map of events) constructed by information-sharing processes that can adapt to many users and their various modalities of usage. The map of events reconstructs events (of the past ten years) from the aforementioned big data sets on natural disasters using the general notion of dimensionality, and applies machine intelligence to find correlations within them in order to cluster them accordingly. When a new event enters the map, it transforms the map into a model: an event protocol. The event protocol creates a specific arrangement of events concerning the characteristics of the new event, and it produces a spectrum of probable assessments depending on the questions the user has. The map of events and the event protocols integrate machine intelligence with intelligent humans to produce a clear, agile, and versatile disaster response.

As a result, this research produces a mock-up that adapts to many users and their various modalities of usage to facilitate individual responses to natural disasters. The mock-up contains a compilation of big data (from resources focusing on architectonic applications for disaster response) and can provide specific articulations depending on the user's concerns. In particular, this research validates its methodology on three experiments dealing with the urban scale. Each task is taken as a case study exploring the early stages of disaster response. The first example deals with rapid building safety assessments, the second works with spatial planning and the built environment, and the last deals with policy recommendations. To conclude, architects have been involved in temporary, semi-permanent, and permanent housing. However, I am proposing that architects should also be included in the early stages of disaster response, changing the architect's role from designing unique artifacts to curating massive sets of data and deriving architectural interventions from them.

Karla Saldaña Ochoa

saldana@arch.ethz.ch

BEYOND TYPOLOGIES, BEYOND OPTIMIZATION: EXPLORING NOVEL STRUCTURAL FORMS AT THE INTERFACE OF HUMAN AND MACHINE INTELLIGENCE

This research presents a computer-aided design framework for the generation of non-standard structural forms in static equilibrium that takes advantage of the interaction between human and machine intelligence. The design framework relies on the implementation of a series of operations (generation, clustering, evaluation, selection, and regeneration) that make it possible to create multiple design options and to navigate the design space according to objective and subjective criteria defined by the human designer. Through the interaction between human and machine intelligence, the machine can learn the nonlinear correlation between the design inputs and the design outputs preferred by the human designer and generate new options by itself. In addition, the machine can provide insights into the structural performance of the generated structural forms. Within the proposed framework, three main algorithms are used: Combinatorial Equilibrium Modeling for generating structural forms in static equilibrium as design options, Self-Organizing Map for clustering the generated design options, and Gradient-Boosted Trees for classifying the design options. These algorithms are combined with the ability of human designers to evaluate non-quantifiable aspects of the design. To test the proposed framework in a real-world design scenario, the design of a stadium roof is presented as a case study.

Link to publication

2019-2020

Karla Saldaña Ochoa, Patrick Ole Ohlbrock, Pierluigi D’Acunto, Vahid Moosavi

saldana@arch.ethz.ch

ENHANCING DISASTER RESPONSE WITH ARCHITECTONIC CAPABILITIES BY LEVERAGING MACHINE AND HUMAN INTELLIGENCE INTERPLAY

Disaster response presents the current situation, creates a summary of known information on the disaster, and sets the path for recovery and reconstruction. During the last ten years, various disciplines have investigated disaster response in a two-fold manner. First, researchers published several studies using state-of-the-art technologies for disaster response. Second, humanitarian organizations produced numerous protocols on how to respond to natural disasters. This raises a question: if we have developed a considerable number of studies on responding to natural disasters, how can their results be cross-validated against NGOs' protocols to enhance the involvement of specific disciplines in disaster response?

To address the above question, the research proposes an experiment that considers both kinds of knowledge: 8364 abstracts of academic writing in the field of Disaster Response and 1930 humanitarian organizations' mission statements indexed online. The experiment uses Artificial Intelligence in the form of a neural network to perform the task of word embedding (Word2Vec) and an unsupervised machine learning algorithm for clustering (Self-Organizing Maps). Finally, it employs Human Intelligence for the selection of information and decision making. The result is a mock-up that suggests actions and tools relevant to a specific scenario, forecasting the involvement of architects in Disaster Response.
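The clustering step can be illustrated with a minimal self-organizing map. This is a sketch under loud assumptions, not the project's implementation: the two-dimensional vectors below are made-up stand-ins for Word2Vec embeddings (which would have many more dimensions), the map is one-dimensional with four nodes, and `train_som` and `best_matching_unit` are hypothetical helper names.

```python
import math
import random


def best_matching_unit(weights, vector):
    """Index of the map node whose weight vector is closest to `vector`."""
    distances = [math.dist(w, vector) for w in weights]
    return distances.index(min(distances))


def train_som(data, n_nodes=4, epochs=200, lr0=0.5, radius0=2.0, seed=0):
    """Train a tiny one-dimensional self-organizing map on a list of vectors."""
    rng = random.Random(seed)
    dim = len(data[0])
    weights = [[rng.random() for _ in range(dim)] for _ in range(n_nodes)]
    for epoch in range(epochs):
        # Learning rate and neighbourhood radius both shrink over time.
        decay = 1.0 - epoch / epochs
        lr = lr0 * decay
        radius = max(radius0 * decay, 0.5)
        for vector in rng.sample(data, len(data)):
            bmu = best_matching_unit(weights, vector)
            for i, w in enumerate(weights):
                # Gaussian neighbourhood on the 1-D grid of nodes.
                influence = math.exp(-((i - bmu) ** 2) / (2 * radius ** 2))
                for d in range(dim):
                    w[d] += lr * influence * (vector[d] - w[d])
    return weights


# Made-up 2-D "embeddings"; real Word2Vec vectors are assumed, not shown.
embeddings = {
    "shelter": [0.90, 0.10], "housing": [0.85, 0.15], "rebuild": [0.80, 0.20],
    "sensor": [0.10, 0.90], "drone": [0.15, 0.85], "satellite": [0.20, 0.80],
}

weights = train_som(list(embeddings.values()))
clusters = {word: best_matching_unit(weights, vec)
            for word, vec in embeddings.items()}
for word, node in sorted(clusters.items(), key=lambda item: item[1]):
    print(f"node {node}: {word}")
```

Words that land on the same or neighbouring nodes are candidates for one thematic cluster; deciding which clusters matter for a given scenario is the part the abstract assigns to human intelligence.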

Link to publication

Karla Saldaña Ochoa

saldana@arch.ethz.ch

ARCHITECTURE MEDIATING INFORMATION TECHNOLOGY

(SNF project funding; 2017~2020)

This basic research departs from looking at the phenomena of media architecture from a broad infrastructural context, that is, “architecture that mediates information technology”. Recent media architecture projects do not merely apply information technology but present distinct manners of characterizing it. This research builds on the extensive work published in: < Atlas of Fantastic Infrastructures: an intimate look at media architecture > (Mihye An, Applied Virtuality Book Series vol. 9, Birkhäuser, 2016).

The current research explores the mediality of such architectures not only out of phenomenological and technological interest, but particularly from an architectonic perspective. It takes a closer look at the double forces of the same leverage: on one hand we have infrastructural capacities enabled by new technics and technologies, on the other there is our intimate, at times massive, infusing of fantasies and imagination. Regarding the former, particular attention is devoted to understanding any technical activity in relation to nature, working with a tentatively framed concept of naturing. With nature here not understood as something pre-given or pure, the idea of naturing aims to attend to the constant crafting of new ordering(s) on a multiplicity of dimensions. The latter then concerns our affairi-cal capacity and inventions, which in principle have to do with our ability to live in the abstract. But broadly speaking, it includes all kinds of metaphysical sensibility that cast a certain imagination on what we could consider as the “natural world”. The two (naturing and affairi-cal capacity) are nevertheless often tightly entangled; hence, the research brings in the idea of naturing affairs as the key theoretical frame for delving into the relation between architecture and information technology today.

Consequently, the project will initiate a vivid conversation between architects, theorists, and artists, in order to test out the aforementioned concepts. A symposium, tentatively titled < Architecture and Naturing Affairs > will be organized in early 2020. The final outcome will be a book publication and a website, in which a spectrum of architectonic concepts will be elaborated.

2017-2020

Mihye An

an@arch.ethz.ch

PLANETARY URBAN MODELING: MODELING DIFFERENT URBAN PHENOMENA AT THE SCALE OF PLANET

The world population is moving to urban environments, and managing this urbanization process is a global challenge. With the recent computational advancements in geo-information processing and machine learning, today we have a very active ecosystem of public and commercial data providers who have eventually “indexed” our planet into a query-able database. Our main hypothesis is that these great technological opportunities open up a radically new way of thinking about our urban phenomena at the single unit of the planet without losing spatial resolution, without imposing a generic urban model, while being able to find better answers for our “local” challenges. Looking at urban phenomena at the scale of the planet not only solves the issue of the arbitrariness of physical borders (e.g. the border of a city or a region), but also brings new opportunities to look at the urbanization process as a whole, to learn from existing best practices in urban development, and to develop scalable computational methods which address unique problems such as urban air pollution, heat islands, urban density, urban flood, urban economy, etc.

Vahid Moosavi

svm@arch.ethz.ch

ATLAS OF INDEXICAL CITIES: ARTICULATING PERSONAL CITY MODELS ON GENERIC INFRASTRUCTURAL GROUND

How do we move through cities if we have access to all the cities and places of our world? And if any place is accessible, can we consider a local that is informed by the global and a global that knows any local? Can we grasp the atmospheric and ritual qualities of these places? Where should we go if we like temples and gods? Can these indexes tell us a story about an event in Kyoto? And what would it look like in Berlin? And can we find Berlinness in Mumbai then? Can we “remember” places we don’t know of yet by assuming, like in some sort of anamnesis, our own experience as learning substrate?

Pervasive mobile computing, abundantly available urban data and established ideas of Quantum Physics have become our familiar contemporary landscape. This decentralizing dynamic invokes a situation where infrastructural artefacts not only outnumber their users, but also exert an influence on a planetary scale. Can we still call the former objects and the people who use them subjects? Who is observing and what is being observed? How are the local and the global entangled? Considering the Observer Effect (where the mere observation of a phenomenon inevitably changes that phenomenon), the role of the observer seems to be widely absent in urban theory, as it remains grounded in the production of generic systems that operate with specific data, under the assumption of a pre-existent general logic to cities.

Yet, what happens when we invert this setup and assume that personal (and even ephemeral) city models can be articulated by an active observer (the citizen) and enacted with generic data as a substrate for infrastructures? Can generic infrastructures be informed as personalized instruments by and for an observer? To illustrate this question, we will dive into an adventurous attempt at a personal search engine for the planet. This navigation instrument, proper to our time, will expose us to familiarity and otherness simultaneously: it will learn from what we know and project it on what is yet to be known, turning it into something that can be “re-membered”, placed together again. While orchestrating large Instagram and satellite image datasets, we will navigate from one query to another with a transient yet specific orientation, projecting personal preferences on a world that circulates around us.

Diana Alvarez-Marin

alvarez@arch.ethz.ch

CHARACTERS WITHOUT SCRIPTS: HOW ALICE DEALS WITH A LOT

Alice is one of my avatars.

She comes from the plenty; she comes from the wonderland. She is an avatar, a bot, an alien; she is both me and not me; we are related, but she is both independent of and dependent on me. She deals with a lot, with different data streams and an abundance of objects, images and text. The story of Alice renders how, by playing with my personal libraries, I can find consistencies in the infinite flows of data and bring them together in synthetic characters. Alice is one of those characters; a letter and a persona at once.

Alice is very close to the way brands behave today. She is implicit and atmospheric. Alice is not perfect. There are a lot of open questions around her, but I think she can tell an interesting story of how to play with a lot of data.

Since she is my avatar, like me, she is interested in architecture and information. She talks about them by potentially playing with all the images and texts. In this play the paradoxes of working with a lot of data become apparent.

If data is big enough, it will not tell us the truth but will show us the world we want to see.

In this kind of setup, how do we talk about things we care for? How do I write if I have access to all the books in the world? How do I take or work with pictures when they are omnipresent and overflowing? One possible approach to these phenomena comes from thinking of coding as a kind of literacy.

This is a story of how Alice became literate in coding. Currently she has a voice on Twitter.

2015 -

Miro Roman

roman@arch.ethz.ch

ask.alice-ch3n81.net

twitter.com/Alice_ch_n3e81

FAST SYNTHESIS OF RESIDENTIAL FLOOR PLANS BY MACHINE LEARNING

What could the interplay between machine learning and the architectural design process be if we managed to integrate them to develop probabilistic design spaces? If any function, zone, and element can be related and connected, can we design by learning from data? Can we step out of the objective loop of parametric & computational designs and enter a quantum designs era? How can we invent if we can design big projects in milliseconds? How can we design where there are no problems to solve and we are able to leverage the knowledge of a vast number of great architectural designs? And can we integrate physical and non-physical elements of spaces in one coherent informational space?

By establishing the integration of machine learning and design process, a new kind of design space emerges where the function of any element or zone can be related. What kind of abstract questions should architects learn to ask? Can architects free themselves from looking at design as a way of solving spatial problems and look at design as a fast navigation process within probabilistic design-spaces where all of the problems are already solved?

To step out of the dilemma of today's generative optimized designs (the goal to perform better and faster), we should rather focus on extracting hidden heuristic patterns, embedded knowledge and qualities from floor plans. This will intuitively give us fast performance with qualitative probabilistic designs.

This research presents a way of extracting the hidden qualities embedded in floor-plan data. Thus, we will be able to build probabilistic multiverse patterns of any residential floor plans, which allow any floor plan to morph into any other: a probabilistic space of many positions and relations augmented by machine learning.

April 2019 - December 2020

Mohamed Zaghloul

in collaboration with Diplan

zaghloul@arch.ethz.ch

PANORAMA OF CONCEPTS: A SURVEY ON THE MAIN IDEAS THAT KEEP EVOLVING AND REVOLVING WITHIN THE DOMAIN OF ARCHITECTURE

Architecture is challenged between the optimization of building processes and being reduced to adding some individuality to generic design. The question of what architecture actually is, besides engineering and management, becomes relevant when specialization takes command:

On one hand Alberti claimed that the architect is a person who learned by means of a definite and admirable plan to determine – in thought and feeling, as well as to carry out in practice – what best suits the most outstanding human needs.

On the other hand, Semper criticized that we want art to create art, but we are given numbers and rules; that we want something new, but we are given something that is even more remote from the requirements of our time.

In recent years the discipline of architecture seems prone to subjugating itself to certain temporary buzzwords, which risks producing simple solutions according to popular ideologies. However, architecture is one of the few professions that are able to operate on a meta-level: making references to mathematics, arts, management and social sciences offers an opportunity to maintain a generalist overview beyond immersion in strictly systemic algorithmic approaches.

Based on the hypothesis that a “faculty of thinking” (which architecture presumably is – rather than a discipline) is defined by certain invariants, this project goes through the writings of the past masters of the discipline to find those concepts that keep re-appearing throughout the changing paradigms. Assuming that we can only learn from the past since architecture has always been challenged by advancing technologies, the path is laid out. When machine intelligence can be trained to generate solutions, then the human intellect is challenged to formulate questions.

Dennis Lagemann

lagemann@arch.ethz.ch

LAWRENCE AND THE GLEAMY CLOUD

This venture questions modes of valuation and representation in the world of food. What is food? Where does it come from? How is it made? What does it do? How does it taste? So far, formal methods provide analytical mechanisms that are meant to cope with the complexities and liveliness at stake and to provide stable answers. The values, parameters and classifications derived and generalized in experimental setups aim to represent efficacious activities in the course of adding up the sum to a whole: a product of food. A simplified representation is formed and fixed, allowing for the control of what can be valued and known and thereby guaranteeing the future successful and safe processing and replication of food. Can we blow up these chains and prepare a ground that might allow us to integrate anything we would like to relate to food, and thereupon formulate a space within which we can actively and consistently write and cultivate food? We would like to follow this desire and make whatever food might bear graspable.

In order to do so, we invert the manners of valuation and representation and start with an immense product of givens. A novel ground that comprises various collections of data — from analytical food data such as food compounds and health effects up to consumer food reviews. A data-driven model opens up channels that allow for a communication between those various different domains. The model formulates a network of conductive characters. Transformations attempt to keep the structure of each character and look for similarities and differences throughout the domains. This results in a probabilistic cloud of indexes that is covered up by spectra that bear the worth of that which we might call and derive as food from there.

The plenty hosts an infinite amount of symbolic originalities. We can make those graspable and applicable alongside the characters of an alphabet. The characters index the plenty of meanings, experiences and measurements yet kept as a secret. Applications might show us potential relations, such as between a certain food species, a food compound with a certain impact on health and a community of consumers, and vice versa. They allow for multiple readings and writings and hence make it possible to derive values. These theoretical statements might initially not make sense but can guide practices and experiments in order to prove the correctness of the indexical talk.

2018

David Schildberger

schildberger@arch.ethz.ch

DESIGN & ARCHITECTURAL MODELLING WITH NEURAL POTENTIALS

This research proposes contributions on the progressive generation of design and architectural features through the decoding/encoding of discriminative neural patterns from humans, and the implementation of neurofeedback, gained through an electroencephalography (EEG)-based brain-computer interface (BCI) and rapid serial visual presentation techniques, for the active modulation of implemented generative models.

While the built environment indisputably aggregates and modulates a wide range of stimuli from the world, it necessarily encroaches on physiological and psychological states. The research inverts this concern and asks what such states can provide in the computational modelling of design and architectural artefacts. By opening up to this extra-disciplinary corpus of knowledge, a novel way to approach architectural modelling (and its variance or spectrum in outputs) can be sought through the notion of Design Beliefs (Bayesian models of information processing) rather than Design Grammars (models of formal logics) widely used in generative approaches of Computer-Aided Architectural Design today.

Both theoretically and technically the research focuses on the development of design and architectural modeling strategies involving the real-time coupling of both Human Intelligence (HI) and Machine Intelligence —or Machine Learning (ML)— in a mixed computational closed-loop. Borrowing from connectionist methods of artificial learning and symbolist methods of visual working memory, the developed mixed model makes a generalized use of HI for discriminative capacities, and ML as a perpetual generator of new potential design solutions and aggregates to explore.

2016

Pierre Cutellic

cutellic@arch.ethz.ch

SPECTRAL CHARACTERIZATION AND MODELLING WITH CONJUGATE SYMBOLIC DOMAINS

The aim of this project is to challenge computational models in architecture with contemporary modelling approaches that regard computation from the perspective of communication between different domains of a problem. The concept of communication generalizes the modelling approaches characteristic for contemporary physics and can be well illustrated with the distinction between two major scientific modelling paradigms, one crystallizing and another emerging at the turn of the 20th century.

The set-theoretic paradigm of the 19th century, formalized by Georg Cantor, reinforced the intuitive assumption about objects as positive, sharply defined and naturally distinguishable entities, embedded in space and indexed by time. Modelling within it relies on creating structures on top of sets. It proceeds from the notion of an empty set, into which the elements are introduced by the notion of membership. At any stage of this procedure, the sets are totally formed objects.

This view could not be sustained in the quantum paradigm characteristic of the 20th century. Objects in the quantum paradigm are not sharply defined; there is no space in which they can be located; and no causal relations could explain their behavior. The whole observable domain is in a “fuzzy” state. In it an object can be thought of as a temporal foam, spectrally emerging from indistinguishability.

Thinking about objects in quantum physics indicates a partition point of view that assumes completely different initial conditions in terms of modelling. It begins with the assumption that no objects can be fundamentally distinguished. In order to discriminate anything from this state and create information, the partition paradigm requires models that can act as communication bridges between at least two levels into which the problem can be stratified. The application of bridges to an object yields a partition spectrum, which serves as the basis for defining objects. The spectrum can be further refined until a satisfactory approximation of an object is reached. Only at this point can that which is actually distinguished be represented in terms of a set.

The natural communication model, created by Dr Elias Zafiris, is a mathematical framework that formalizes such a modelling procedure. Zafiris derives the notion of an object spectrally on the basis of a partition procedure applicable to the modelling of quantum phenomena, formalizing it within category theory, an abstract branch of pure mathematics.

My contribution to the project was in showing that the partition-based modelling in the natural communication model could be computationally implemented by means of machine learning. For that purpose, I devised the self-organizing model, a computational framework that establishes coexistence between different levels of the model, allowing for communication.

In the final step, the self-organizing model is applied in an experiment that addresses the question of how similarity between spaces in architecture could be accounted for within a paradigm of communication on the basis of their architectural representation. The hypothesis of the experiment was that the question of similarity in the object's own terms cannot be adequately posed within the established computational models in architecture, due to their reliance on a pre-given notion of space in which the architectural representations are submerged. Being able to speak about the similarity of spaces requires addressing the problem outside of the given space of representation, finding or constructing another level of representation of the same phenomena, where the question can be posed and answered once the coexistence between two levels is established. By applying partition and generalization procedures of the self-organizing model to a large number of floor plan images, a finite collection of elementary geometric expressions was extracted, and a symbol was attached to each instance. This collection of symbols is regarded as the alphabet, and any floor plan created by the same conventions can thereby be defined on this symbolic basis, as a writing in that alphabet. Finally, by employing Markov chains, each floor plan is represented as a chain of probabilities, based upon its individual alphabetic expression of a written language. The probability values characterizing each floor plan as a writing in a common alphabet are then used to compute similarities between different floor plans.
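The last two steps, a floor plan written as a chain of probabilities and the comparison of such chains, can be illustrated with a minimal sketch. It is not the project's implementation: the three-letter alphabet, the example symbol sequences, the choice of a first-order chain and the use of cosine similarity are all assumptions made for illustration.

```python
from collections import Counter
import math

def transition_probabilities(sequence, alphabet):
    """Estimate first-order Markov transition probabilities P(b | a)."""
    pair_counts = Counter(zip(sequence, sequence[1:]))
    probs = {}
    for a in alphabet:
        row_total = sum(pair_counts[(a, b)] for b in alphabet)
        for b in alphabet:
            # A symbol that is never followed by anything gets an all-zero row.
            probs[(a, b)] = pair_counts[(a, b)] / row_total if row_total else 0.0
    return probs

def cosine_similarity(p, q):
    """Compare two chains by the angle between their flattened transition tables."""
    dot = sum(p[key] * q[key] for key in p)
    norm_p = math.sqrt(sum(v * v for v in p.values()))
    norm_q = math.sqrt(sum(v * v for v in q.values()))
    if norm_p == 0.0 or norm_q == 0.0:
        return 0.0
    return dot / (norm_p * norm_q)

# Hypothetical alphabet and floor plans "written" in it (illustrative only).
ALPHABET = "ABC"
plans = {
    "plan_1": "ABABABCABAB",
    "plan_2": "ABABCABCABA",
    "plan_3": "CCCBCCCACCC",
}

chains = {name: transition_probabilities(seq, ALPHABET) for name, seq in plans.items()}
sim_12 = cosine_similarity(chains["plan_1"], chains["plan_2"])
sim_13 = cosine_similarity(chains["plan_1"], chains["plan_3"])
print(f"plan_1 vs plan_2: {sim_12:.3f}")
print(f"plan_1 vs plan_3: {sim_13:.3f}")
```

In this toy setting the two plans that alternate mostly between A and B come out as more similar to each other than to the plan dominated by C. How a two-dimensional floor plan is linearized into a symbol sequence is left open here.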

2015-2017

Nikola Marincic

marincic@arch.ethz.ch

PANORAMAS OF CINEMA

This research deals with the construction of panoramas of cinema and the qualification of spaces in architecture via code.

The availability of videos in large quantities online, coupled with computer code to deal with them all at once, presents a promising scenario for architecture, as videos are alive and talking from many times and spaces. This research explores this scenario by operating millions of clips, frames and dialogues extracted from a vidéothèque, and constructing rich panoramas with them. This work thinks of the qualification of space beyond a panorama, as it comes from a position, has a direction and follows a personal code. Panoramas of Cinema looks into different media so as to establish communication between a panorama and an architect.

The advancements of this research are shared with the students of the Department of Architecture at ETH as optional courses, and with students from other educational institutions across Europe in other formats—e.g. TU Vienna, UdK Berlin, Aalto University Helsinki. Panoramas of Cinema is being featured in the XII International Architecture Biennale of São Paulo.

2019 -

Jorge Orozco

orozco@arch.ethz.ch

SELF-ORGANIZATION-DRIVEN MODELLING

The most prominent machine learning algorithms have always been about supervised learning. Their aim is to learn the function that maps the input, whatever it is, to a pre-specified output, so that when the computer encounters a previously unseen data instance it can effectively predict what the output should be. This mode of operation can be very useful in addressing a large number of problems, but has limited potential in design, where the outputs are not given in advance but rather left open, to emerge and crystallize. For such processes, unsupervised learning models showed higher potential. This research project explored algorithms involving self-organization processes, which are usually classified under unsupervised learning. Such algorithms include the self-organizing map (more details in the attached paper "An Instrument for Communication: Self-organizing Model"), neural gas, PageRank and others.

The contribution of my work between 2012 and 2014 was in rethinking the methodology of working with self-organizing maps by bypassing the feature engineering part. It stems from quite different assumptions about how phenomena can be represented.

An act of measuring always involves a mapping from one field of values to another. From a mathematical standpoint, the set of all the observations we call data needs to be mapped into a real number space. The topology of this space can be seen as the geometric spectrum of this algebra. This means that each measurement provides a topological space inherent to the measured phenomenon itself. My modelling approach takes advantage of this idea: instead of using a single number to represent a feature, it represents it by means of a geometric spectrum of observations.

In this approach to modelling, one need not be too specific (in the set-theoretic sense) in describing the modelled phenomenon. The important part is that the phenomenon must allow itself to be measured and numerically characterized. This requires a number of measuring instruments, which should be thought of abstractly: they can be either physical measuring instruments, such as sensors, or completely virtual devices, as long as they can map their output into the real number field. If each of these instruments is sampled in intervals, which can be time intervals or any kind of discrete (spatial, harmonic) unfolding, each instrument forms a channel of measurements. A single sample from each of the channels at a given time (or unfolding) is concatenated into a vector, and a large number of such vectors is taken as the input of a self-organizing map. In this setup, the role of the self-organizing algorithm is to extract the characteristic features of this higher-order algebraic spectrum. The clusters registered on top of the lattice are then characteristic features of the object. More importantly, the map becomes a computational abstraction of the original phenomenon, whatever the phenomenon is.
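The setup described above, several channels sampled in intervals, one sample per channel concatenated into a vector, and the vectors fed to a self-organizing map, can be sketched roughly as follows. This is a minimal illustration and not the model used in the research: the three virtual instruments, the 4 x 4 lattice, the linear decay schedules and all parameter values are assumptions.

```python
import math
import random

def sample_channels(n_samples):
    """Sample three hypothetical virtual instruments at discrete steps t.

    Each instrument maps a step to a real number; one sample from every
    channel is concatenated into a single input vector.
    """
    channels = [
        lambda t: math.sin(0.3 * t),
        lambda t: math.cos(0.3 * t),
        lambda t: (t % 10) / 10.0,
    ]
    return [[channel(t) for channel in channels] for t in range(n_samples)]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def best_matching_unit(weights, vector):
    """Return the lattice position whose weight vector is closest to `vector`."""
    return min(weights, key=lambda pos: squared_distance(weights[pos], vector))

def train_som(vectors, rows=4, cols=4, epochs=30, seed=0):
    """Train a small self-organizing map on a rows x cols lattice."""
    rng = random.Random(seed)
    dim = len(vectors[0])
    weights = {(r, c): [rng.uniform(-1.0, 1.0) for _ in range(dim)]
               for r in range(rows) for c in range(cols)}
    total_steps = epochs * len(vectors)
    step = 0
    for _ in range(epochs):
        for vector in rng.sample(vectors, len(vectors)):
            progress = step / total_steps
            rate = 0.5 * (1.0 - progress)  # learning rate decays linearly
            radius = 1e-3 + (max(rows, cols) / 2.0) * (1.0 - progress)
            bmu = best_matching_unit(weights, vector)
            for pos, w in weights.items():
                grid_dist_sq = (pos[0] - bmu[0]) ** 2 + (pos[1] - bmu[1]) ** 2
                influence = math.exp(-grid_dist_sq / (2.0 * radius ** 2))
                for i in range(dim):
                    w[i] += rate * influence * (vector[i] - w[i])
            step += 1
    return weights

def quantization_error(weights, vectors):
    """Mean distance between each vector and its best matching unit."""
    total = 0.0
    for vector in vectors:
        bmu = best_matching_unit(weights, vector)
        total += math.sqrt(squared_distance(weights[bmu], vector))
    return total / len(vectors)

vectors = sample_channels(200)
untrained = train_som(vectors, epochs=0)  # random initial lattice only
trained = train_som(vectors, epochs=30)
print("error before training:", round(quantization_error(untrained, vectors), 3))
print("error after training: ", round(quantization_error(trained, vectors), 3))
```

No feature is engineered by hand in this sketch: the lattice units settle on recurring combinations of channel values, which is the sense in which the trained map stands in for the measured phenomenon.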

2012-2014

Nikola Marincic

marincic@arch.ethz.ch

CAST OFF - CHOCOLATE FROM CACAO CELL CULTURES

This venture questions the seemingly inevitable sourcing of food as a resource from nature. The relationship of industrial agriculture to nature leaves striking marks on our habitat on planet Earth. Is it thus possible to leave behind this predominant system, which operates on a limited ground with the primary means of an optimized re-production, and to rethink the cultivation of food without relating its origin to this meanwhile naturalized ground, preparing instead a substrate from which we can cast off novel, rich and digestible natures?

In search of possible approaches to recultivate the relationship between man and nature, we have found a promising potential in the cell culture technique. This technique allows the growth and cultivation of cells of any species in a bioreactor outside of their natural environment. The technique prepares a generic ground that engages with nature on a local level. It unlocks chance and allows for balances that can be cultivated and contracted. The bioreactor becomes a host for novel characters of food.

In the context of Switzerland, we began to think about the cultivation of cacao for the production of chocolate. So far, we have been able to discover the great potential of a technique that detaches cacao from its current context and allows for an ad infinitum cultivation. The technique unlocks new expressions of the natural aroma spectra inherent in cacao and thereby enables the further luxuriation of cacao and chocolate. This circular endeavor rewards us with ever new articulations: a luxuriation of nature in a manner that we would call sustainable, as it also makes us the host, not merely the guest, in nature.

2015 -

David Schildberger, Philipp Meier, Raphael Gnehm, Rüdiger Maschke, Irene Stutz, Vasilisa Pedan, Ansgar Schlüter, Karin Chatelain, Irene Chetschik, Dieter Eibl, Tilo Hühn, Regine Eibl

schildberger@arch.ethz.ch

URBAN MORPHOLOGY MEETS DEEP LEARNING: A COMPARATIVE STUDY OF URBAN FORMS IN 1.1 MILLION CITIES ACROSS THE PLANET

Most studies of urban form and urban morphology are based on very few observations. On the other hand, the availability of large spatial data sets across the planet, such as OpenStreetMap (OSM), offers a new opportunity for the study of urban development patterns.

In this work, with the use of deep neural networks from computer vision (specifically, deep convolutional auto-encoders), we automatically compare and identify the main patterns of urban forms via images of the street networks of these 1.1 million locations, all taken at the same spatial scale. After training the model, one can visually find patterns of development similar to those of any selected area.
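Once a trained encoder has mapped each street-network image to a latent vector, finding similar areas reduces to a nearest-neighbour search in that latent space. The sketch below shows only this retrieval step and does not include the convolutional auto-encoder itself. The location names, the three-dimensional latent codes and the choice of Euclidean distance are hypothetical and purely illustrative.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two latent codes of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def most_similar(query_id, codes, k=2):
    """Return the k location ids whose latent codes lie closest to the query's."""
    query = codes[query_id]
    ranked = sorted(
        (euclidean(query, code), loc)
        for loc, code in codes.items()
        if loc != query_id
    )
    return [loc for _, loc in ranked[:k]]

# Hypothetical latent codes; in practice they would come from the encoder
# half of a trained auto-encoder and have many more dimensions.
latent_codes = {
    "grid_a": [0.9, 0.1, 0.0],
    "grid_b": [0.8, 0.2, 0.1],
    "radial_a": [0.1, 0.9, 0.2],
    "radial_b": [0.2, 0.8, 0.1],
    "organic_a": [0.1, 0.2, 0.9],
}

neighbours = most_similar("grid_a", latent_codes, k=2)
print(neighbours)
```

With more than a million locations, a linear scan like this one would likely be replaced by an approximate nearest-neighbour index, but the principle stays the same.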

Next step: How can cities learn from each other? Building a search engine of development patterns for spatial planners

At the moment, in addition to spatial form, we are going to simultaneously consider functional use and other urban quality indicators, including economy, health, environment, and transportation. US community surveys with information on more than 60K locations (census tracts) provide an available Big Data set to train multimodal machine learning algorithms. The final results can be represented as easy-to-browse interfaces for urban design and spatial development researchers.